In this post I want to group all the content related with the CySA+ certification that I considere important and worth to keep in this site. I want to clarify that some important content may not be added because I feel that I already know and it is not worth to spend that extra time.

Threat Intelligence Sharing

Security vs Threat Intelligence

Security Intelligence is the process through which data generated in the ongoing use of systems is collected, processed, analyzed and disseminated in order to provide a security status of those systems.

Threat Intelligence is the process of collecting, investigating, analyzingand disseminating information about emerging threats to obtain an external threat landscape.

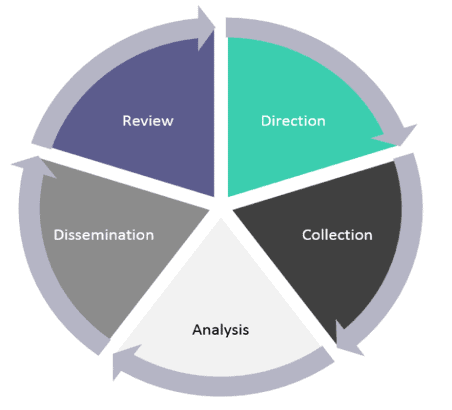

Intelligence Cycle

Security intelligence is a process. You can see diferents schemas in the internet and they may be a bit different (some of them group steps into a unique step), but the overall idea is the same.

- Requirements, Planning and Direction: In this phase the goals for the intelligence gathering effort/cycle is set.

- Collection and Processing: The collection of the data can be done by software tools and SIEMs. Afterwards, this data is processed using Data Enrichment processes with the goal to keep relevant data.

- Analysis: The analysis of the processed data is performed against the use cases decided from the Requirements, Planning and Directon phase. This step can make use of auto model analysis, using machine learning and artificial intelligence.

- Dissemination: In ths phase the results obtained by the analysis is published to consumers so other teams can take action. They can be classified using the type of intelligence they refere:

- Strategic Intelligence: related with broad things and objectives. They are usually reports to executives, power point slides, etc.

- Operational Intelligence: Adresses the day to day priorities of the specialists.

- Tactical Intelligence: They refere to real time decisions, like alerts detected by the SOC.

- Review/Feedback: This phase aims to clarify the requirements and improve the the Collection, analysis and dissemination phases for the next cycle by reviewing the current inputs and outputs. Usually the feedback phase takes into account:

- Lessons learned

- Measurable success

- Evolving threat issues

Intelligence Sources

The Collection and Processing step of the Intelligence Cycle, we have to analyze the sources that are used to obtain the data. We should considere this properties of the sources and data:

- Timeliness: Property of a source that ensures that it is up-to-date.

- Relevancy: Property of a source that ensures that it matches the use cases intended for it and that the data that the source provides is relevant.

- Accuracy: Property of a soruce that ensures that the results are accurate and effective.

- Confidence Levels: Property of a source that ensures the produced statements are reliable.

The places where we obtain the data can also be classified:

- Proprietary: Comercial services offering acces to updates and research data.

- Close-source: Data obtained from the own provider’s research.

- Open-Source: Public available data from public databases.

- US-CERT

- UK NCSC

- AT&T Security

- MISP (Malware Information Sharing Point)

- Virus Total

- Spanhaus

- SANS ISC Suspicious Domains

OSINT are the methods to obtain information through public records, websites, and social media. We will talk about it later.

ISACS: Intelligence Sharing and Analysis Centers

The ISAC is a non-profit group set to share sector-specific threat intelligence and security best practices (CISP is the same but in the UK). There are diferent types of ISACS, classified using the sector: - Critical Infrastructure - Goverment - Healthcare - Financial - Aviation

Threat Intelligence Sharing (within the organitzation)

In the Dissemination phase, is important to share the information with the corresponding teams to act according to the situation (make use of the data).

- Risk Management: Identify, evaluate and prioritizes threats and vulnerabilities to reduce the impact.

- Incident Response: Organized approach to address security-breach/cyber-attacks.

- Vulnerability Management: Identify, classify and prioritize software vulnerabilities.

- Detection and Monitoring: The practice of observing to identify anomalous patterns to analyze them further.

Classifying Threats

Types of Malware

- Commodity Malware: This type of malware is available to purchase or it is free. They exploit a known vulnerability. Is used by a wide range of threat actors.

- Zero-day: Malware that exploits a zero-day vulnerability, which means that is a vulnerability that has just been discovered.

- APT - Advance Persistent Threats:They are usually performed by Organized Crime and once they obtain access, they mantain it in order to obtain information. The information is obtained by the malware and sent to the Command and Control (C2), a infrastructure of hosts and services with which the attackers direct, distribute and control the malware over bots/zombies (botnets).

Threat Research

Threats used to be identifyed by a signature (part of the threat that is re that allowed them to be recognizable). However, obfuscating techniques have become better and searching for the signature is no longer the best option. Some different ways to identify threats can be:

- Reputational Threat Research: This consists of a blacklists of known threat sourcesn like signatures, IP addresses ranges and DNS domains. If something is dettected comming from thoose sources, is classified as a threat.

- Indicator of Compromise (IOC): Thoose are residual signs that an asset or network have been compromised (or is beeing attacked). Modified files, excessive bandwith, unknown port ussage and suspicious emails can be IOC. If the attack is still going on, instead of saying it is a IOC, we will say it is a Indicator of Attack (IOA).

- Behavioral Thread Research: This detection technique is based on Tactics, Techniques and Procedures used by the threats. They correlate the IOC with attack patterns. Some examples can be:

- DDoS

- Viruses and Worms

- Network Reconnaissance

- APT

- Data Exfiltration

The C2 that the APT’s use may do Port Hopping technique in order to use different ports and make them more difficult to detect. Another technique that they may use is Fast Flux DNS, which consists on changing the IP address associated with a domain frequently.

Attack Frameworks

A Kill Chain is a framework that was first introduced by Lockheed Martin (military US company) and it describes the stages bw which a threat actor progresses in a network intrusion attack.

- Reconnaissance: The attacker determines the methods that will be used to continue with the attack by gathering information about the victim. It can be used Passive or Active information gathering.

- Weaponization: This is the step were the malware/exploit is developed considering the detected vulnerabilities.

- Delivery: In this step the method that will be used to introduce the attack will be identified.

- Explotation: This step occurs when the exploit/malware is successfully introduced.

- Installation: When the malware gets executed, this step allows to obtain a remotr access tool to the victim and achieve persistance.

- Comand and Control (C2): Establish a outbound channel to a reomote server (botnet) to progress withthe attack.

- Actions on Objectives: Attackers make use of the access achieved to collect what they want.

The MITRE ATT&CK Framework is a knowledge base that consist of a matrix where different tactics and techniques used by the attackers are described. You can check it here https://attack.mitre.org/matrices/enterprise/.

Last but not least, we have the Diamond model of Intrusion Analysis. This framework analyzes the security incidents by exploring the relationship between four features: adversary, capability, infrastructure and victim. This is a complex model, that can also help to create Activity Threads and Activity Attack Graphs. Fore a more detailed information, I suggest reading this post: https://www.socinvestigation.com/threat-intelligence-diamond-model-of-intrusion-analysis/.

Indicator Management

It is important to use a normalized way when sharing information about threats.

STIX (Structured Thread Information eXpression) is a standar terminology for IOCs and a way to indicate relationships between them. It is expressed in JSON. It has objects that contain multiple attributes with their corresponding value:

- Observed Data

- Indicator

- Attack Patterns

- Campaign agains threat actor

- Courses of Action (mitigation techniques used to reduce the attack)

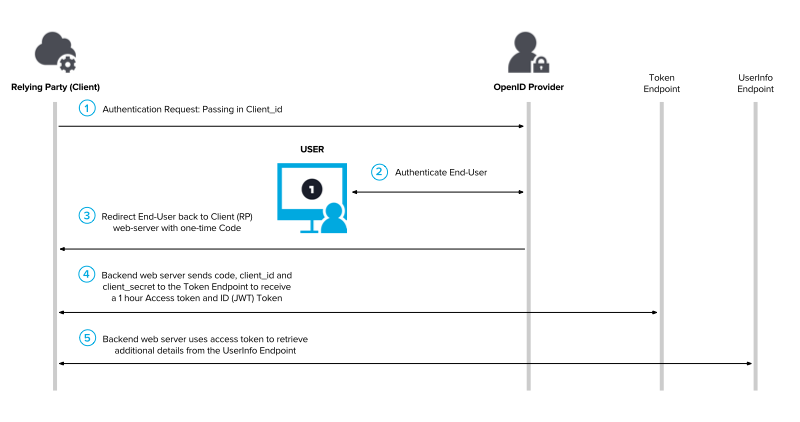

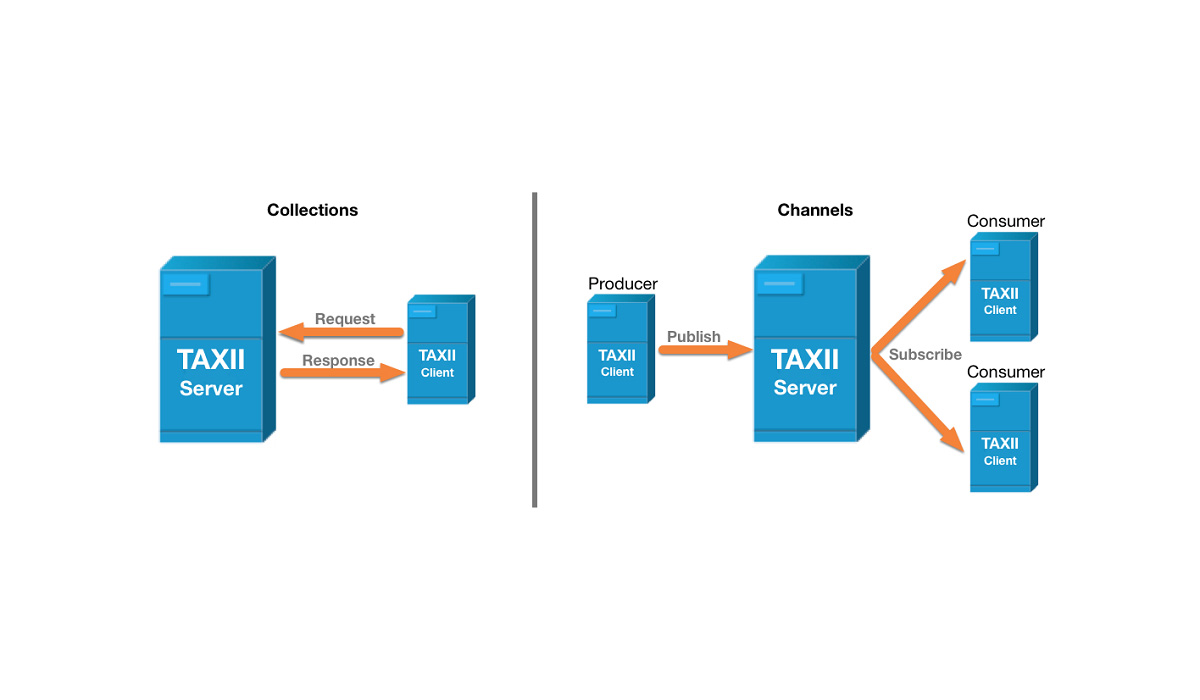

TAXII (Trusted Automated eXchange of Indicator Information) is a protocol for supplying codified information to aoutomate incident detectiond and analysis. The analysis tools provide updates of the threats using this protocol.

OpenIOC is a framework that uses XML files for supplying codified information to automate indicent detection.

MISP (Malware Information SHaring Protocol) provides a server platform that allows cyber intelligence sharing. It supports OpenIOC definitions and can receive and send information using STIX over the TAXII protocol.

Threat Hunting

Threat hunting is a technique designed to detect presence of threats that have not been discovered by normal security monitoring. Is also less disruptive than a penetration test.

Threat Modeling

Threat modeling is a structured approach to identifying potential threats and vulnerabilities in a system, network, or application. The goal of threat modeling is to understand the potential attack vectors and to identify and prioritize the risks associated with them. This process typically involves:

- Identify the attack vectors

- The impact of the attack in terms of confidentiality, integrity and availability of the data

- Identify the likelihood of the attack to occur

- What mitigations can be implemented

The information gathered during threat modeling can then be used to inform security design and implementation decisions.

The Adversary Capability can be classified to determine de resources and expertise availabe by the threat actor: Aquired and augmented, Developed, Advanced and Integrated

The Attack Surface can be classified to determine the points where a network or app receives external connections that can be exploited: Holistic network, Websites and cloud-services and Custom software applications.

The Attack Vector is the methodology used by the attackers to gain acces to the network or exploit a gain unauthorized acces: Cyber, Human and Physical.

The Likelihood is the chance of a threat being exploited.

The Impact is the cost of a security incident. Usually expressed in cost (money).

OSINT (Open-Source Intelligence)

All the public information and tools that can be used by the attacker to obtain specific data about a victim is classified as OSINT. It can allow the attacker to develop a stretegy for compromising the victim. Here you can find all the OSINT framework with different tools that can be used and public information.

Some of the most used and know ones are:

Google Hacking: Use Google search advance operators (“”, NOT, AND/OR, and keywors to determine the scope of the search, such as site, flietype, related,…) to locate desired information. You can visit the Google Hacking Database to obtain usefull queries.

Shodan: shodan.io is a search engine optimized for identifying vulnerable Internet-attached devices. It can allow you to search, for example, open ssh ports facing the internet without having to do an active scan.

Profiling Techniques such as Email Harvesting can be used to try to guess valid and existing email addresses for a specific domain.

Harvesting Techniques such as whois command, DNS Zone Transfer (method that asks for a replicated DNS database across a set of DNS servers that will reply if they are missconfigured) and DNS/Web Harvesting are other OSINT tools used to gain information about subdomains, source code, hosting providers, comments in the website code, etc.

If you want to know more about OSINT and passive information gathering, you can read this other post Passive Information Gathering that I wrote when I was studying for the OSCP certification.

Network Forensics

Tools

In order to analyze network traffic, it must be captured and decoded.

Switched Port Analyzer (SPAN): This is a feature that can be activated in a switch. This feature, also known as port mirroring, makes a copy of the traffic seen on a single port or multiple ports and sends the copy to another port (usually a monitoring port which will process the packets).

Packet Sniffer: This can be a fisical debice connected to a network or a software program that uses records data frames as they pass over the network. Deppending on the placement of the sniffer inside the network, it will be able to sniff more or less data.

- Wireshark and tcpdump are software programms that can be used to sniff traffic.

Flow Analysis

There are different ways to analize the flow that you capture:

- Full Packet Capture (FPC): The entire packet is captured (header+payload).

- Flow Collector: It just records mettadata and statistics about the traffic blow, but not the traffic itself.

- NetFlow: This is a standard developed by CISCO and is used to report the nerwork flow into a structured database. It includes:

- Network protocol interface

- Version and type of IP

- Source and destination IP

- Source and destination port

- IPs ToS (Type of Service)

- NetFlow: This is a standard developed by CISCO and is used to report the nerwork flow into a structured database. It includes:

- Zeek: This is a hybrid tool that monitors the network in a passive form and only logs relevant data. The events are stored in JSON format.

- Multi Router Traffic Grapher (MRTG): This tool creates graphs that show traffic flows through the network interfaces of routers and switches by using SNMP (Simple Network Management Protocol).

IP and DNS Analysis

There are Known-bad IP/DNS addresses, which are range of addresses taht appears in blackists and can help to detect if the traffic is malicious.

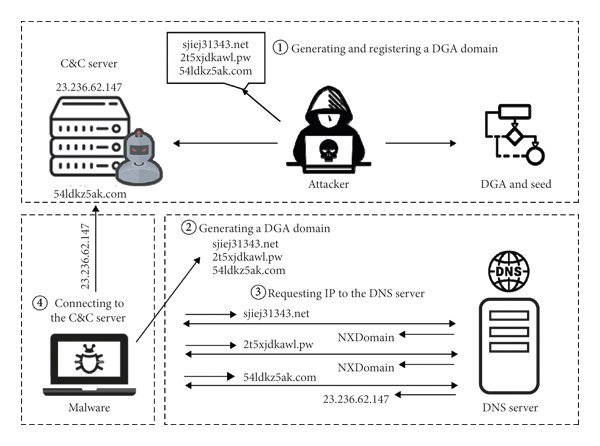

Recent malware uses Domain Generation Algorithms (DGA) to evade blackists. The purpose of a DGA is to make it harder for security researchers and network defenders to identify and block the C2 servers used by the malware.

The algorithm usually uses a seed value and an algorithm to generate a large number of domain names. The seed value can be based on a specific date, time or some other value that is known to both the malware and the C2 server. The algorithm then generates a large number of domain names by applying the seed value to the algorithm.

Some of the common techniques used by DGA are:

1

2

3

Using a predefined set of words or characters and applying mathematical operations to them.

Using encryption functions to generate domain names.

Using a combination of words or characters that are unlikely to be registered by legitimate domain owners.

Once the domain names are generated, the malware will try to connect to each one of them in a specific order, until it finds the C2 server. The C2 server will then be used to download additional malware, exfiltrate data, or receive commands.

A Fast Flux Network (FFN) is another method used by the malware to avoid being detected. FFN is a type of botnet that uses a technique to hide the true location of a command-and-control (C2) server by constantly changing the IP address associated with a specific domain name using a technique known as “fast flux” where the IP address associated with the domain name changes frequently. The malware infects a large number of machines and turns them into “proxies” for the C2 server. The main goal of a FFN is to evade detection by making it difficult to identify and block the C2 server used by the malware.

URL Analysis

Another way to detect a possible attack is to perform a URL Analysis.

Percent-encoding, also known as URL encoding, is a technique used to encode special characters, such as spaces, slashes, and ampersands, that are not allowed in URLs so that they can be transmitted safely. It replaces these characters with a percentage sign followed by the ASCII code of the character in hexadecimal form. This technique is also important for preventing cross-site scripting (XSS) attacks by encoding special characters that could be used in an XSS attack so that they are not executed by the browser.

Here you can find a list with the more usefull ones:

| Symbol Representation | Encoding |

|---|---|

| ” “ (Space) | %20 |

| “&” (Ampersand) | %26 |

| ”+” (Plus) | %2B |

| ”,” (Comma) | %2C |

| ”/” (Forward slash) | %2F |

| ”:” (Colon) | %3A |

| ”;” (Semi-colon) | %3B |

| ”=” (Equals) | %3D |

| ”?” (Question mark) | %3F |

| ”@” (At sign) | %40 |

| ”$” (Dollar sign) | %24 |

| ”#” (Pound sign) | %23 |

| ”<” (Less than) | %3C |

| ”>” (Greater than) | %3E |

| ”’” (Single quote) | %27 |

| ””” (Double quote) | %22 |

Network Monitoring

Firewalls

Firewall Logs keep a lot of usefull data that can be used to detect suspicious behaviour. They keep the connections that have been alowed or denyed, the protocols used, the bandwith usage, NAT/PAT translation, etc. The rules that the firewall will follow for deciding the permitted and denied connections will be defined in the Access Control List (ACL)

The format of the logs will be vendor specific:

- iptables This is a Linux based firewall that uses the syslog file format to store the data. The logs have a code that can help to identify the severitoy of log.

| Code | Severity | Description |

|---|---|---|

| 0 | Emergency | The system is unusable. |

| 1 | Alert | Action must be taken immediately. |

| 2 | Critical | Critical conditions. |

| 3 | Error | Error conditions. |

| 4 | Warning | Warning conditions. |

| 5 | Notice | Normal but significant condition. |

| 6 | Informational | Informational messages. |

| 7 | Debug | Debug-level messages. |

- Windows Firewall It uses W3C Extended Log File Format. This is a format used by web servers to record information about requests made to the server, while syslog is a standard used to send log messages from network devices to a centralized log server.

When a Firewall is under-resourced and logs can’t be collected fast enough, an attacker could exploit this by sending a lot of thata and overwhelming the firewall, hence the traffic can’t be collected properly nor detect unauthorized access. This is known as a Blinding Attack

Firewall Configurations

It is important to study where the Firewall will be placed in the network. However, this will be diffent for each use case.

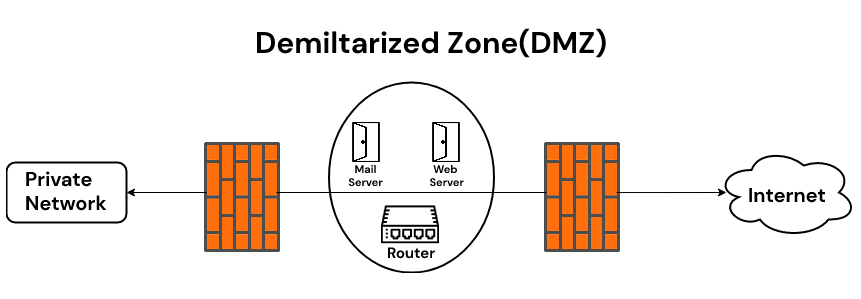

- Demilitarized Zone DMZ: If your network has servers that exposes services (like web pages) to the Internet, they are usaly in a independent subnetwork, isolated from the internal network of the company. Since thoose services are exposed to the Internet, are more vulnerable and is important to add a separation between them and your other servers/workstations. This DMZ zone usually has a Firewall facing the internet, allowing trafic related with the services exposedand another one between the DMZ and the internal network, more restrictive.

It is also important that the ACLs are processed from top-to-bottom, this means that the most specific rules have to be in the top and the generall ones at the end. Some principles for a good ACL configuration are:

- Block incoming requests from private, loopback and multicast IP address ranges.

- Block protocols that should only be used locally and not received from the internet, like: ICMP, DHCP, OSPF, SMB.

- Authorize just known hosts and ports to use IPv6

A firewall can DROP a packet or REJECT it. When the deny rule REJECTS the packet, it explicitly sends a response saying that the traffic has been rejected. However DROPPING it will just ignore the packet and the sender won’t receive any answer, which will difficult the work (for example, mapping the ports and network) if it is a malicious adversary. Dropping can be used to create a Black Hole and avoid DoS and DDoS attacks by sending the traffic to the null0 interface and not sending a response.

You can also configure your Firewall to send to the Black Hole all unused IP addreses within your network, because they should not be used, and if usage is dettected it is unauthorized.

A Sinkhole is like a Black Hole but instead of sending the traffic to the null0 interface, it is redirected to another subnet for further investigation.

Is also important to observe the eggress traffic. A host could have been infected by malware and communicating with the internet (Comanda and Control servers). Best practice for configuring egress traffic are:

- Allow whitelisted apps, ports and destination addresses

- Restrict DNS lookups to trusted DNS services

- Block access to known bad IP address ranges (blacklist)

- Block all internet access from host that don’t use internet

Proxy Logs

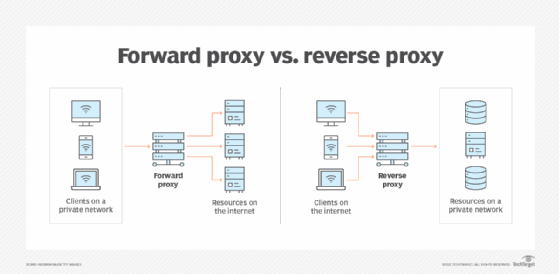

A proxy is a server that acts as an intermediary between a client and another server (e.g., a web server). It can be a Forward or Reverse proxy, and they can also be transparent and Nontransparent.

Forward Proxy: A forward proxy is a proxy that is used by a client to access resources on a remote server. The client sends a request to the forward proxy, which then forwards the request to the remote server, and returns the server’s response to the client. Imagine a company that makes all its workstations to go through a proxy before going to the internet. This situation will imply a forward proxy.

Reverse Proxy: A reverse proxy is a proxy that is used by a server to handle client requests. It is the reverse situation from the forward proxy. Instead of forwarding packets from the clients to the servers, it collects packets received through the internet and redirects to different servers according to the situation.

A nontransparent proxy is the proxy that has to be configured in the client browser, specifying the proxy IP and port (for example Burpsuite). A transparent proxy is a type of proxy server that intercepts and forwards requests and responses to the intended destination without the client being aware of it. It is used for caching frequently requested content, blocking certain types of content, translating IP addresses for clients on a private network to access the internet, and requiring authentication for access control. The client does not need to be configured to use it as it operates in a transparent mode.

Proxy Logs can be analyzed searching for indicators of attack.

Web Application Firewall Logs (WAF)

A Web Application Firewall (WAF) is a security tool that protects web applications from malicious attacks by analyzing incoming traffic and comparing it to predefined rules or patterns. If the traffic matches a known attack, such as SQL injection, XML injection, XSS, DoS, etc. the WAF takes action to block the request. It can be implemented as software or hardware, and can be a standalone solution or part of other security products.

Usually the WAFs store their logs in JSON format and they contain: - Time of event - Severity of event - URL parameters - HTTP method used - Context for the rule

Intrusion Detection System (IDS)

An IDS is a type of security software or hardware that is designed to detect and alert on unauthorized access or malicious activity on a computer network or system.

IDS systems work by continuously monitoring network traffic for suspicious patterns, anomalies, or known malicious activity. They can be set up in two different ways:

Network-based IDS (NIDS): These systems monitor all the traffic that flows through a network, looking for suspicious activity. They are placed at strategic points in the network to monitor traffic from all devices connected to the network.

Host-based IDS (HIDS): These systems monitor the activity on a single host or device, such as a server or a workstation. They are installed on the host itself, and monitor the system logs, process activity, and other data to detect any suspicious activity.

When an IDS detects suspicious activity, it generates an alert or alarm, which can be used to alert network administrators or trigger an automatic response, such as blocking the offending IP address or shutting down the affected service.

Intrusion Prevention System (IPS)

An IPS is a security technology that is similar to an IDS, but with the added capability to take action to prevent unauthorized access or malicious activity on a computer network or system. The actions that an IPS can take include:

- Blocking traffic from a specific IP address or network

- Closing a specific network port

- Quarantining a device on the network

- Logging off a user

An IPS is considered more advanced than an IDS, as it can prevent malicious activity from occurring in real-time, rather than just detecting it and raising an alert. However, it’s important to note that IPS systems, like any other security device, can produce false positives and negatives, so it’s crucial to have a well-configured and fine-tuned system to maximize its effectiveness.

Snort is an open-source, free, and widely-used Intrusion Detection and Prevention System (IDPS) tool. It can be used as both a network-based IDS (NIDS) and a host-based IDS (HIDS) depending on the configuration.

Snort uses a rule-based language to define what it should look for when scanning network traffic. Each rule is made up of several different parts, including:

- Action: This is the first part of the rule, and it specifies what action Snort should take when the conditions specified in the rule are met. For example, an action can be “alert”, “log”, “pass”, “activate”, “dynamic” among others.

- Protocol: This specifies the protocol that the rule applies to, such as TCP, UDP, or ICMP.

- Source and destination IP addresses: These specify the IP addresses that the rule applies to. They can be either a specific IP address or a range of IP addresses.

- Source and destination ports: These specify the ports that the rule applies to. They can also be either a specific port or a range of ports.

- Direction: This field specifies the direction of the rule, either “->” (from source to destination) or “<>” (bidirectional).

- Options: This field is used to specify additional conditions that Snort should look for, such as specific content in the packet, specific flags set in the packet header, or specific values in the packet payload.

- Message: This field is used to specify a message that will be displayed when the rule is triggered.

An example of a Snort rule:

1

2

alert tcp $EXTERNAL_NET any -> $HOME_NET 22 (msg:"SSH Brute Force"; flow:to_server,established;

threshold:type limit,track by_src,count 2,seconds 1; classtype:attempted-recon; sid:10000001; rev:1;)

This rule will trigger an alert when it detects a TCP connection coming from the external network to the home network on port 22 (SSH) with the message “SSH Brute Force”. It’s also configured to track the connection by source IP and only trigger the alert when it detects 2 connections in 1 second.

Network Access Control (NAC) Configuration

Network access control (NAC) provides the means to authenticate users and evaluate device integrity before a network connection is permitted.

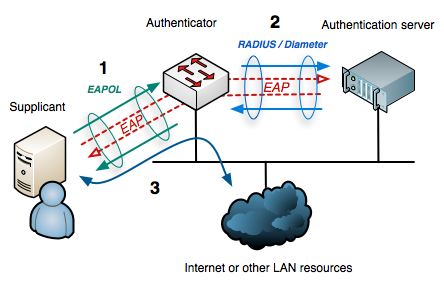

IEE 802.1X is a standard for port-based Network Access Control (NAC) that provides a framework for authenticating and controlling access to a network. It is commonly used in wireless networks and wired Ethernet networks to provide a secure connection for devices.

The 802.1X protocol works by using a supplicant (the device that wants to connect to the network) and an authenticator (a network device such as a switch or wireless access point) to establish a secure connection.

The basic process for 802.1X authentication is as follows:

- The supplicant (device) attempts to connect to the network.

- The authenticator (switch or Access Point) receives the connection attempt and sends an EAP-Request/Identity message to the supplicant, requesting the device’s credentials.

- The supplicant sends an EAP-Response/Identity message containing the device’s credentials (such as username and password) to the authenticator.

- The authenticator forwards the credentials to an Authentication Server (using RADIUS protocol) to verify the device’s identity.

- The Authentication Server verifies the credentials and sends an EAP-Response message indicating whether the device is authorized to access the network.

- If the device is authorized, the authenticator sends an EAP-Success message to the supplicant, allowing the device to access the network.

802.1X also supports other types of authentication methods, such as certificate-based authentication and token-based authentication, in addition to the username and password based authentication.

All the steps above use EAP messages. However, the communication between the Supplicant and the Authenticator uses EAPOL and the communication between the Authenticator and the Authenticatos Server uses RADIUS.

In summary, EAP (Extensible Authentication Protocol) is a framework that defines a standard way to provide authentication and security for wireless networks, EAPOL (EAP over LAN) is a network protocol that is used to carry EAP messages over LANs, and RADIUS (Remote Authentication Dial-In User Service) is a network protocol that is used to authenticate and authorize users attempting to connect to a network. Together, these protocols provide a flexible and secure way to authenticate and control access to a network using 802.1X.

SSID (Service Set Identifier) is a unique name that is assigned to a wireless network. An SSID is used to identify and differentiate between different wireless networks. BSSID (Basic Service Set Identifier) is a unique identifier that is assigned to each wireless access point or router that is part of a wireless network. Each wireless access point or router in a wireless network has a unique BSSID. When a device connects to a wireless network, it uses the SSID to identify the network, and then uses the BSSID to connect to a specific access point or router within that network.

Endpoint Monitoring

A endpoin monitoring is a tool that monitors the performance and status of various devices and systems. They are different from the network monitoring tools because this tools are situated in the endpoint devices instead of the network.

Some tools that are consdered endpoint monitoring tools are:

- Antivirus: Software capable of detecting and removing virus infections and other type of malware, such as worms, Trojans, rootkits, adware, spyware, etc.

- HIDS and HIPS: This are the Host-Based versions of the IDS and IPS and instead of analyzing the network behaviour, they analyze the host where they are based on.

- Endpoint Protection Platform (EPP): EPP are software agents systems tht performs multiple tasks such as Anti Virus, HIDS, firewall, DLP, etc.

- Endpoint Detection and Response (EDR): Software agent that collects logs from the system and can provide early detection of threats.

- User and Entity Behaviour Analytics (UEBA): System powered by Artificaial Intelligence models that can identify suspicious activity

Sandboxing

A sandbox is a computer enviroment isolated from the host system to guarantee that the enviroment is controlled and secured. This sandbox enviroment is usually a virtual machine and should not be used for any other purpose except malware analysis.

Reverse Engineering

Reverse engineering is the process of analyzing a product or system to understand its design, internal structure, and functionality in order to identify vulnerabilities, create compatible products, or understand how it works. It is commonly used in software, hardware, and malware analysis by security researchers, developers, and companies to improve their own products.

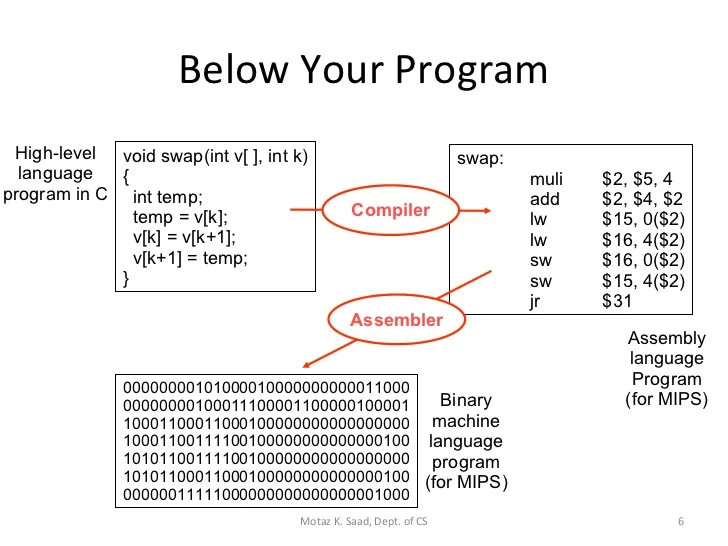

Malware writers often obfuscate the code before it is assembled or compiled to prevent analysis

In order to do malware reverse engineering, some skills and tools are required, like a dissasembler and a decompiler. A disassembler is a tool that takes machine code (i.e., the binary code that is executed by a computer) and converts it into assembly code. Assembly code is a low-level programming language that is specific to a particular architecture and is composed of instructions that are directly executed by the CPU. Disassemblers are used to examine the inner workings of a program, such as the instructions and data structures it uses, and to understand how it interacts with the operating system and other software. They are also used to debug the code and locate potential vulnerabilities.

A decompiler, on the other hand, is a tool that takes compiled code (i.e., machine code or bytecode) and converts it into a higher-level programming language, such as C or Java. The main objective of decompiling is to recover the source code that was used to create the compiled code. Decompilers are used to understand the logic of the code, the algorithms used, and the overall design of the software. They are also used to recover lost or missing source code.

Nice to know information

- A magic number is a special sequence of bytes that is used to identify the file format of a file. These sequences are also known as “file signatures” or “magic bytes”. They are typically located at the beginning of a file and are used by operating systems and applications to determine the type of file.

For example, a magic number for a PNG image file is 89 50 4E 47 0D 0A 1A 0A, which is the first eight bytes of the file. When a program or an operating system reads this sequence of bytes from the beginning of a file, it knows that this file is a PNG image. Similarly, the magic number for a ZIP file is 50 4B 03 04, which indicates that the file is a ZIP archive. We can find a great database of magic numbers here. PeID tool can help identifying the magic number of files and know what they really are by identifying the compiler/packer.

- A program packer is a utility used to compress and encrypt executable files in order to make them smaller and more difficult to reverse engineer. They are commonly used to protect software from piracy and to make it harder for attackers to find vulnerabilities by reverse engineering the code. Packers work by compressing and encrypting the file and include a small piece of code called the “unpacking stub” which decompresses and decrypts the file when it is run. Different packers use different algorithms and encryption methods and the security of a packed file depends on the strength of the encryption and compression algorithm used.

Malware Explotation

When talking about malware, the exploit technique is the specific method that the malware used to infect the host. They usually use a Dropper and a Downloader. The dropper is the malware designed to install or execute other malware embedded in a payload. The downloader is part of the code that connecs to the Internet to retreive additional tools after the initial infection by a dropper.

Code injection is a technique used by attackers to inject malicious code into a legitimate program or process to gain unauthorized access or perform malicious actions. There are several types of code injection attacks, such as buffer overflow, SQL injection, RCE, and DLL injection (Dynamic Link Library). These attacks can be used to gain access to sensitive information, steal data, install malware, or take control of the system. Mitigations include using secure coding practices, input validation and patching vulnerabilities in a timely manner. Masquerading is another technique used by attackers to make a malicious file or program appear as a legitimate one by modifying the file name, file extension or by adding a digital signature to the file.

A hollowed process is a technique used to create a new instance of a legitimate process and then replace the process’ memory with malicious code. The goal of this technique is to evade detection by security software by running the malicious code in the context of a legitimate process.

Behavioral Analysis

Behavioral-based techniques are used to identify infections by analyzing the behavior of a system or process, rather than relying on the code or signature of the malware. Some common behavioral-based techniques used to identify infections include:

- Anomaly detection: This technique compares the current behavior of a system or process to a known baseline, and any deviation from the baseline is flagged as suspicious.

- Signature-less detection: This technique uses machine learning models to identify patterns of behavior that are indicative of malware. It does not rely on the malware’s code or signature.

- Heuristics: This technique uses a set of rules or guidelines to identify suspicious behavior. For example, a process that attempts to access a sensitive file or registry key may be flagged as suspicious.

- Sandboxing: This technique runs the suspected malware in a controlled environment, such as a sandbox, where its behavior can be observed and analyzed.

- Behavioral monitoring: This technique monitors the system for suspicious activity, such as changes to the file system, registry, or network connections.

- Fileless malware detection: this technique detect malware that doesn’t write files on the disk, but it runs in memory, so it’s difficult to detect, but behavioral-based techniques can detect this type of malware.

Nice to know information

Windows Registry

The Windows Registry is a hierarchical database that stores configuration settings and options for the operating system and for applications that run on the Windows platform. It contains information such as user preferences, installed software, system settings, and hardware configurations. It is organized into keys and values and is used by the operating system and by applications to store and retrieve configuration information. However, it is important to be cautious when modifying the registry as incorrect modifications can cause system instability and crashes. It is recommended to backup the registry before making any changes.

You can view the regestry by using regedit.exe. When you open the regedit.exe utility to view the registry, the folders you see are Registry Keys. Registry Values are the data stored in these Registry Keys. A Registry Hive is a group of Keys, subkeys, and values stored in a single file on the disk.

Any Windows system contains the following root keys:

- HKEY_CURRENT_USER: Contains the root of the configuration information for the user who is currently logged on. The user’s folders, screen colors, and Control Panel settings are stored here. This information is associated with the user’s profile. This key is sometimes abbreviated as HKCU.

- HKEY_USERS: Contains all the actively loaded user profiles on the computer. HKEY_CURRENT_USER is a subkey of HKEY_USERS. HKEY_USERS is sometimes abbreviated as HKU.

- HKEY_LOCAL_MACHINE: Contains configuration information particular to the computer (for any user). This key is sometimes abbreviated as HKLM.

- HKEY_CLASSES_ROOT: The information that is stored here makes sure that the correct program opens when you open a file by using Windows Explorer. This key is sometimes abbreviated as HKCR.

- HKEY_CURRENT_CONFIG: Contains information about the hardware profile that is used by the local computer at system startup.

If you need to inspect the registry from a disk image, the majority of the hives are located in the C:\Windows\System32\Config directory. Other hives are stored under the C:\Users<username>:

- NTUSER.DAT (mounted on HKEY_CURRENT_USER when a user logs in)

- USRCLASS.DAT (mounted on HKEY_CURRENT_USER\Software\CLASSES) Apart from these files, there is another very important hive called the AmCache hive. This hive is located in C:\Windows\AppCompat\Programs\Amcache.hve. Windows creates this hive to save information on programs that were recently run on the system.

Some other very vital sources of forensic data are the registry transaction logs and backups. The transaction logs can be considered as the journal of the changelog of the registry hive. Windows often uses transaction logs when writing data to registry hives. The transaction log for each hive is stored as a .LOG file in the same directory as the hive itself.

There are a lot if important regestrys to bear in mind when performing a forensic activity, but some of the most important may be:

Network Interfaces and Past Networks: We can find this information in the

HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\Tcpip\Parameters\InterfacesEach Interface is represented with a unique identifier (GUID) subkey, which contains values relating to the interface’s TCP/IP configuration. This key will provide us with information like IP addresses, DHCP IP address and Subnet Mask, DNS Servers, and more. This information is significant because it helps you make sure that you are performing forensics on the machine that you are supposed to perform it on. The **past networks can be found in:HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Windows NT\CurrentVersion\NetworkList\Signatures\[Unmanaged|Managed]Autostart Programs (Autoruns): The following registry keys include information about programs or commands that run when a user logs on.

- HKEY_CURRENT_USER\Software\Microsoft\Windows\CurrentVersion\Run

- HKEY_CURRENT_USER\Software\Microsoft\Windows\CurrentVersion\RunOnce

- HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Windows\CurrentVersion\RunOnce

- HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Windows\CurrentVersion\policies\Explorer\Run

- HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Windows\CurrentVersion\Run

For the services, the regestry key is located at HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services

SAM and user information: The SAM hive contains user account information, login information, and group information. This information is mainly located in the following location:

HKEY_LOCAL_MACHINE\SAM\Domains\Account\UsersRecent Files: Windows maintains a list of recently opened files for each user. We can find this hive at

HKEY_CURRENT_USER\Software\Microsoft\Windows\CurrentVersion\Explorer\RecentDocsOffice also mantains a list of recently opened documents atHKEY_CURRENT_USER\Software\Microsoft\Office\<VERSION>\. Starting from Office 365, Microsoft now ties the location to the user’s live ID. In such a scenario, the recent files can be found at the following location.HKEY_CURRENT_USER\Software\Microsoft\Office\VERSION\UserMRU\LiveID_####\FileMRU.Evidence of Execution: Windows keeps track of applications launched by the user using Windows Explorer for statistical purposes in the User Assist registry keys. These keys contain information about the programs launched, the time of their launch, and the number of times they were executed. However, programs that were run using the command line can’t be found in the User Assist keys. We can find this information at

HKEY_CURRENT_USER\Software\Microsoft\Windows\Currentversion\Explorer\UserAssist\{GUID}\Count. As previously mentioned, the AmCache includes execution path, installation, execution and deletion times, and SHA1 hashes of the executed programs.

Background Activity Monitor or BAM keeps a tab on the activity of background applications. Similar Desktop Activity Moderator or DAM is a part of Microsoft Windows that optimizes the power consumption of the device. Both of these are a part of the Modern Standby system in Microsoft Windows. They are located at: - HKEY_LOCAL_MACHINESYSTEM\CurrentControlSet\Services\bam\UserSettings{SID} - HKEY_LOCAL_MACHINESYSTEM\CurrentControlSet\Services\dam\UserSettings{SID}

- External Devices: The following locations keep track of USB keys plugged into a system. These locations store the vendor id, product id, and version of the USB device plugged in and can be used to identify unique devices.

HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Enum\USBSTORandHKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Enum\USB.

Similarly, the following registry key tracks the first time the device was connected, the last time it was connected and the last time the device was removed from the system.

HKEY_LOCAL_MACHINESYSTEM\CurrentControlSet\Enum\USBSTOR\Ven_Prod_Version\USBSerial#\Properties\{83da6326-97a6-4088-9453-a19231573b29}\#### In this key, we can change the #### by the following digits to get the required information:

| Value | Information |

|---|---|

| 0064 | First Connection time |

| 0066 | Last Connection time |

| 0067 | Last removal time |

The device name of the connected drive can be found at the following location:

HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Windows Portable Devices\Devices

Sysinternals

The Sysinternals suite includes a variety of tools that can be used for system administration, troubleshooting, and security. Some of the most popular tools include. You can download all the tools or just the ones that you want here. You can use Sysinternals Live if you want to execute the tools from the web instead of downloading them. To do this you jus need to write \\live.sysinternals.com\tools\<toolname> to de command prompt (you need to be executing the WebDAV client service and Network Discovery).

Sigcheck

Command-line utility that shows file version number, timestamp information, and digital signature details, including certificate chains. For example, if we wanted to check for unsigned files in C:\Windoes\System32, we can use Sigcheck:

1

sigcheck -u -e c:\windows\system32 -accepteula

-e Scan executable images only (regardless of their extension) -u If VirusTotal check is enabled, show files that are unknown by VirusTotal or have non-zero detection, otherwise show only unsigned files. -accepteula Silently accept the Sigcheck EULA (no interactive prompt)

Streams

Alternate Data Streams (ADS) is a file attribute specific to Windows NTFS (New Technology File System). Every file has at least one data stream ($DATA) and ADS allows files to contain more than one stream of data. Natively Window Explorer doesn’t display ADS to the user. There are 3rd party executables that can be used to view this data, but Powershell gives you the ability to view ADS for files.

Let’s examplify this. In this example we have a file.txt which, if opened with a text editor, it only has the “”.

If we run streams file.txt we get this output:

1

2

3

4

5

6

7

8

C:\Users\Administrator\Desktop>streams file.txt

streams v1.60 - Reveal NTFS alternate streams.

Copyright (C) 2005-2016 Mark Russinovich

Sysinternals - www.sysinternals.com

C:\Users\Administrator\Desktop\file.txt:

:hidden.txt:$DATA 26

We can see that the file has two streams: hidden.txt and $DATA. Now, we can check what is in the hidden.txt:

1

2

C:\Users\Administrator\Desktop>more < file.txt:hidden.txt

I am hiding in the stream.

However, if we don’t specify the stream or we just open the file, we will see the other data (the $DATA stream).

1

2

C:\Users\Administrator\Desktop>more < file.txt

I'm in the DATA stream

SDelete

SDelete is a command line utility that takes a number of options. In any given use, it allows you to delete one or more files and/or directories, or to cleanse the free space on a logical disk. SDelete implements the DoD 5220.22-M data sanitization method, which does:

- Writes zero and verifies de write

- Writes one and verifies the write

- Writes a random character and verifies the write

TCPView

TCPView is a Windows program that will show you detailed listings of all TCP and UDP endpoints on your system, including the local and remote addresses and state of TCP connections. The TCPView download includes Tcpvcon, a command-line version with the same functionality.

Windows already has a builtin tool called Resource Monitor (resmon) that provides the same functionality.

Autoruns

This utility shows you what programs are configured to run during system bootup or login, and when you start various built-in Windows applications like Internet Explorer, Explorer and media players. This is a good tool to search for any malicious entries created in the local machine to establish Persistence

ProcDump

ProcDump is a command-line utility whose primary purpose is monitoring an application for CPU spikes and generating crash dumps during a spike that an administrator or developer can use to determine the cause of the spike.

Process Explorer

The Process Explorer display consists of two sub-windows. The top window always shows a list of the currently active processes, including the names of their owning accounts, whereas the information displayed in the bottom window depends on the mode that Process Explorer is in: if it is in handle mode you’ll see the handles that the process selected in the top window has opened; if Process Explorer is in DLL mode you’ll see the DLLs and memory-mapped files that the process has loaded.

Process Monitor

Process Monitor is an advanced monitoring tool for Windows that shows real-time file system, Registry and process/thread activity. It combines the features of two legacy Sysinternals utilities, Filemon and Regmon, and adds an extensive list of enhancements including rich and non-destructive filtering, comprehensive event properties such as session IDs and user names, reliable process information, full thread stacks with integrated symbol support for each operation, simultaneous logging to a file, and much more. Its uniquely powerful features will make Process Monitor a core utility in your system troubleshooting and malware hunting toolkit. To use ProcMon effectively you must use the Filter and must configure it properly.

PsExec

PsExec is a light-weight telnet-replacement that lets you execute processes on other systems, complete with full interactivity for console applications, without having to manually install client software. PsExec’s most powerful uses include launching interactive command-prompts on remote systems and remote-enabling tools like IpConfig that otherwise do not have the ability to show information about remote systems.

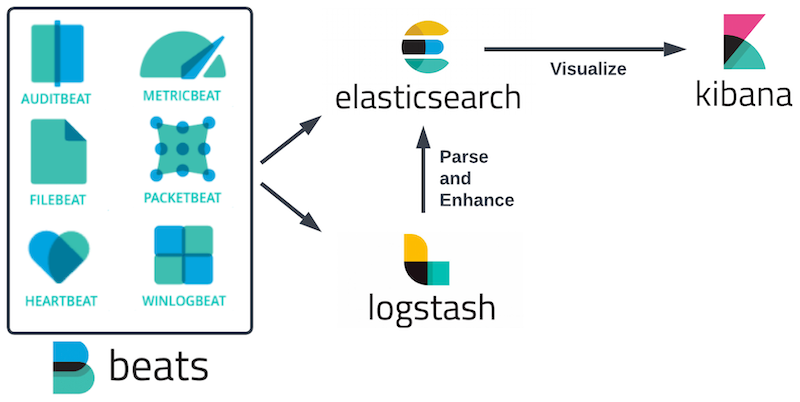

Sysmon

System Monitor (Sysmon) is a Windows system service and device driver that, once installed on a system, remains resident across system reboots to monitor and log system activity to the Windows event log. It provides detailed information about process creations, network connections, and changes to file creation time. By collecting the events it generates using Windows Event Collection or SIEM agents and subsequently analyzing them, you can identify malicious or anomalous activity and understand how intruders and malware operate on your network.

Sysmon requires a config file in order to tell the binary how to analyze the events that it is receiving. You can create your own Sysmon config or you can download a config. Sysmon includes 29 different types of Event IDs, all of which can be used within the config to specify how the events should be handled and analyzed. Some of the most important are:

- Event ID 1: Process Creation: This event will look for any processes that have been created. You can use this to look for known suspicious processes or processes with typos that would be considered an anomaly

Event ID 3: Network Connection: The network connection event will look for events that occur remotely. This will include files and sources of suspicious binaries as well as opened ports.

Event ID 7: Image Loaded: This event will look for DLLs loaded by processes, which is useful when hunting for DLL Injection and DLL Hijacking attacks. It is recommended to exercise caution when using this Event ID as it causes a high system load.

Event ID 8: CreateRemote Thread: The CreateRemoteThread Event ID will monitor for processes injecting code into other processes. The CreateRemoteThread function is used for legitimate tasks and applications. However, it could be used by malware to hide malicious activity.

- Event ID 11: File Created: This event ID is will log events when files are created or overwritten the endpoint. This could be used to identify file names and signatures of files that are written to disk.

Event ID 12 / 13 / 14: Registry Event: This event looks for changes or modifications to the registry. Malicious activity from the registry can include persistence and credential abuse.

Event ID 15: Event ID 15: FileCreateStreamHash: This event will look for any files created in an alternate data stream. This is a common technique used by adversaries to hide malware.

- Event ID 22: DNS Event: This event will log all DNS queries and events for analysis. The most common way to deal with these events is to exclude all trusted domains that you know will be very common “noise” in your environment. Once you get rid of the noise you can then look for DNS anomalies.

When you execute sysmon, you need to specify the config file, for example, if we downloaded the config fole from the SwiftOnSecurity:

1

Sysmon.exe -accepteula -i ..\Configuration\swift.xml

Once sysmon is executing, we can look at the Event Viewer to monitor events.

WinObj

WinObj is a 32-bit Windows NT program that uses the native Windows NT API (provided by NTDLL.DLL) to access and display information on the NT Object Manager’s name space. Winobj is particularly useful for developers, system administrators, and advanced users who need to understand and troubleshoot the internal workings of the Windows operating system. It provides a comprehensive view of the objects present in the system, their relationships, and properties.

BgInfo

Winobj is particularly useful for developers, system administrators, and advanced users who need to understand and troubleshoot the internal workings of the Windows operating system. It provides a comprehensive view of the objects present in the system, their relationships, and properties.

RegJump

Using Regjump will open the Registry Editor and automatically open the editor directly at the path, so one doesn’t need to navigate it manually. You have to provide the registry path.

Strings

Strings just scans the file you pass it for UNICODE (or ASCII) strings of a default length of 3 or more UNICODE (or ASCII) characters.

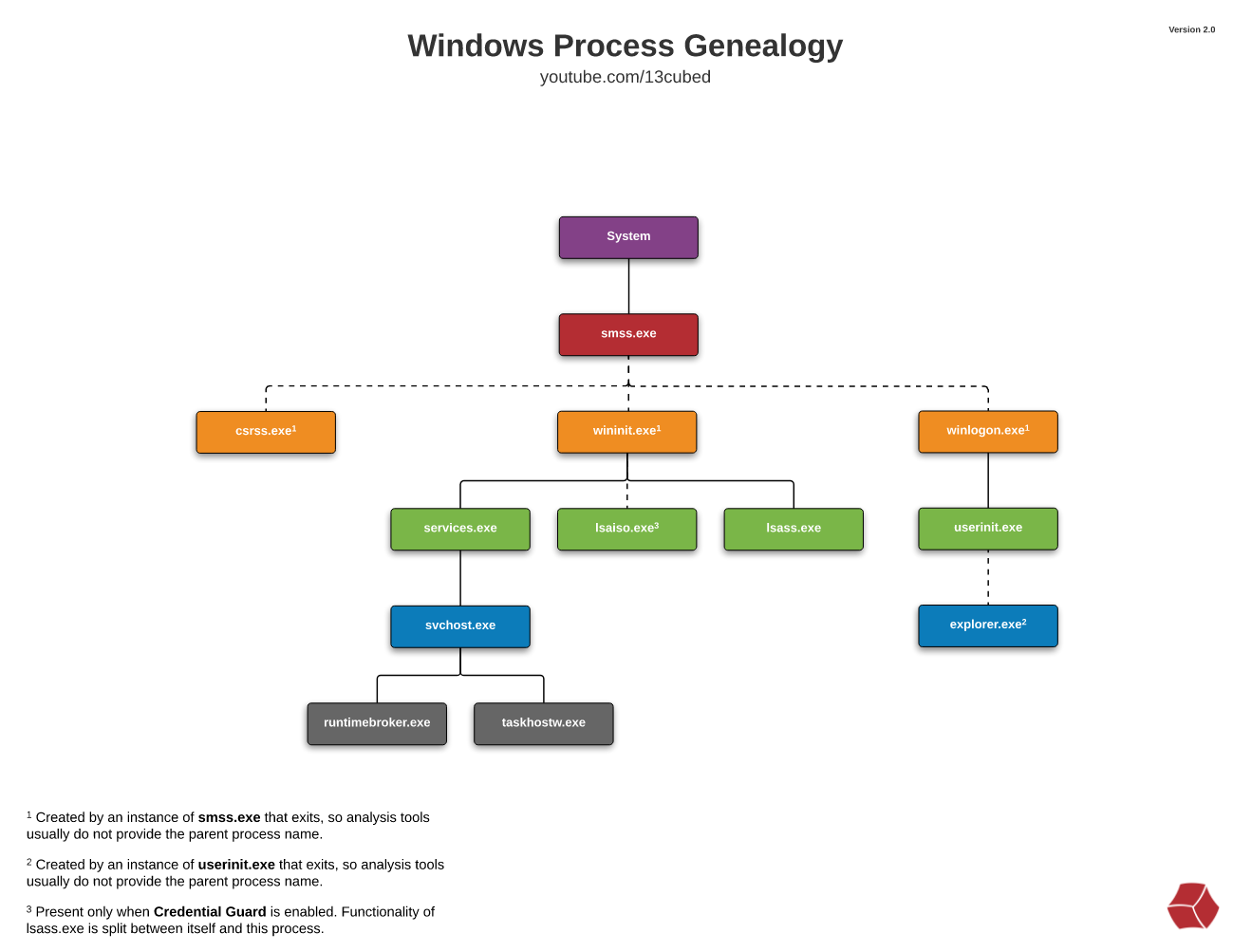

Windows Process Genealogy

System Idle and System: PID 0 is assigned to the System Idle Process, a special process that runs when the computer has no other tasks to perform and consumes any unused CPU cycles. It is used by the operating system to measure the amount of idle time. PID 4 is assigned to the System process, a special process that runs at the highest privilege level and provides system-level services such as managing system resources and creating/terminating other system processes. Both processes are critical for the proper functioning of the operating system and should not be terminated or interfered with.

Session Manager Subsystem: smss.exe is the process responsible for creating new sessions. A session consists of all of the processes and other system objects that represent a single user’s logon session. Session 0 is an isolated Windows session for the operating System. When smss.exe starts, it creates copies of itself, one of that copy creates csrss.exe and wininit.exe in Session 0 and self-terminates. Another copy creates csrss.exe and winlogon.exe for Session 1 (user session) and self-terminates. If more subsistems are defined in the registry (HKLM\System\CurrentControlSet\Control\Session Manager\Subsystems), they will be launched too. smss.exe also creates environment variables and virtual memory paging files.

Clientt Server Runtime SubSystem: csrss.exe (Client/Server Runtime Server Subsystem) is a legitimate system process that is responsible for managing certain aspects of the Windows operating system, such as creating and deleting threads and managing the Windows subsystem. It is essential for the proper functioning of the operating system and runs as a background process with minimal impact on system performance. However, malware can also use the same name for their malicious processes, so it is important to verify the location (C:\Windows\System32\csrss.exe) and the signature of the process. Terminating or interfering with the csrss.exe process can cause instability and crashes in the operating system, it is not recommended. This process is responsible for the Win32 console window and process thread creation and deletion.

WININIT: winit.exe (Windows Initialization Process), is responsible for launching services.exe (Service Control Manager), lsass.exe (Local Security Authority), and lsaiso.exe within Session 0 (kernel session). It is another critical Windows process that runs in the background, along with its child processes. lsaiso.exe is a process associated with Credential Guard and KeyGuard. You will only see this process if Credential Guard is enabled. Is responsible for initializing the Windows session, managing system services, initializing user profiles, and coordinating the startup and shutdown processes. It is a crucial component that ensures a smooth and controlled system startup and shutdown experience.

Services.exe: services.exe is a Windows process that is responsible for managing system services in the operating system. It is a legitimate system process and it is located in the C:\Windows\System32 folder. services.exe is responsible for starting, stopping, and controlling the status of services on the computer. Services are background processes that run on a computer, they perform a variety of tasks such as managing the network, security, or hardware. Services can be configured to start automatically when the system boots, or they can be started and stopped manually. You can see the services stored in the registry at HKLM\System\CurrentControlSet\Services. Services will be started by the SYSTEM, LOCAL SERVICE, or NETWORK SERVICE accounts. Only 1 instance should be running on a Windows system.

svchost.exe: Service Host is responsible for hosting and managing Windows services. It’s parent process is services.exe. The svchost.exe process hosts multiple services that require specific DLLs for functionality. Instead of duplicating DLLs in memory for each service, svchost.exe enables DLL sharing, conserving system resources and reducing memory usage. The DLLs loaded in svchost.exe are related to the hosted services, providing necessary functions and routines. Svchost.exe acts as a container, allowing multiple services to access and utilize these shared DLLs. Is common to have several instances of svchost.exe, but they should have the -k parameter. The -k parameter is for grouping similar services to share the same process.

Local Security Authority SubSystem: lsass.exe (Local Security Authority Subsystem Service) is a Windows process that is responsible for enforcing the security policy on the computer, it is responsible for managing user authentication and authorization, as well as providing security to the system by validating user credentials and managing access to system resources. It should only have one instance and it has to be a child of wininit.exe. It creates security tokens for SAM (Security Account Manager), AD (Active Directory), and NETLOGON. It uses authentication packages specified in HKLM\System\CurrentControlSet\Control\Lsa.

WINLOGON: winlogon.exe (Windows Logon Application) is a process in the Windows operating system that is responsible for managing the logon and logoff process for a user, it is the process responsible for showing the user the logon screen, where the user inputs their credentials, and it also responsible for loading the user profile and starting the shell (explorer.exe) after a successful logon. This process is executed in Session 1.

USERINIT: userinit.exe is a Windows process that is responsible for initializing the user profile when a user logs on to the system. This process is responsible for starting the Windows shell (explorer.exe) and running any startup programs specified in the user’s profile. You should only see this process briefly after log-on.

Explorer: explorer.exe is the Windows process that is responsible for providing the graphical user interface (GUI) for the operating system. It creates and manages the taskbar, start menu, and desktop, and it also manages the file explorer and other shell components of the operating system. Winlogon process runs userinit.exe, which launches the value in HKLM\Software\Microsoft\Windows NT\CurrentVersion\Winlogon\Shell. Userinit.exe exits after spawning explorer.exe. Because of this, the parent process is non-existent.

EDR Configuration

When using a Endpoint Detection Response, is important to tune it to reduce false positives. An otganitzation canuse online tools like virustotal.com to verify if a url or file has been categorized as malware by other Anti Virus, or may create custom malware signatures or detection rules using:

Malware Attribute Enumeration and Characterization (MAEC) Scheme: It is a standardized language for sharing structured information about malware that is complementary to STIX and TAXII to improve the automated sharing of threat intelligence. MAEC is often used in conjunction with the STIX (Structured Threat Information eXpression) and TAXII (Trusted Automated eXchange of Indicator Information) standards.

Yara: Yara is a tool and file format that allows users to create simple descriptions of the characteristics of malware, called “Yara rules”, that can be used to identify and classify malware samples. Yara rules are written in a simple language that allows users to define the characteristics of malware based on code, function, behavior, or other attributes. They are composed of a rule header and a rule body. The header includes the rule name and description, while the body includes conditions that must be met for the rule to match a given file. These conditions include properties of the file and the code. It is widely used by security researchers and incident responders for identifying and classifying malware. For example, a Yara rule that detects a specific malware family could look like this:

1 2 3 4 5 6 7 8

rule WannaCry_Ransomware { strings: $WannaCry_string1 = "WannaCry" $WannaCry_string2 = "WannaDecryptor" condition: all of them }

To secure an endpoint, a Execution Control tool that determines what additional software may be installed on a client or server beyond its baseline can be used.

- Execution control in Windows:

- Software Restriction Policies (SRP)

- AppLocker

- Windows Defender Application Control (WDAC)

- Execution control in Linux:

- Mandatory Access Control (MAC)

- Linux Security Module (LSM): An LSM provides a set of hooks and APIs that are integrated into the Linux kernel, and allows for the registration of security modules. These modules can be used to implement different security models, such as access control lists (ACLs), role-based access control (RBAC), and mandatory access control (MAC). Some examples of LSMs include:

- AppArmor: A MAC system that uses profiles to define the allowed actions for a specific program or user.

- SELinux: A MAC system that uses security contexts to define the allowed actions for a specific process or file.

Email Monitoring

Indicators Of Compromise

Spam: unsolicited, bulk email messages that are sent to a large number of recipients. The messages are often commercial in nature and can be used to promote products or services, but can also be used to spread malware or phishing attempts.

Phishing: type of social engineering attack that aims to trick individuals into giving away sensitive information, such as passwords or credit card numbers. These attacks are typically carried out through email or instant messaging and often involve links to fake websites or attachments that are designed to steal personal information.

Pretext: a form of social engineering in which an attacker creates a false identity or scenario in order to gain access to sensitive information or resources. This can include creating a fake company or organization, or pretending to be a legitimate person or entity.

Spear phishing: is a targeted form of phishing that is directed at specific individuals or organizations. This type of attack is more sophisticated than regular phishing, as the attackers will often research their target in advance in order to make the message appear more legitimate.

Impersonation: is a form of social engineering in which an attacker pretends to be someone else in order to gain access to sensitive information or resources.

Business Email Compromise (BEC): is a type of scam in which an attacker uses social engineering techniques to trick an employee into transferring money or sensitive information to the attacker, or to a third party. This type of scam is often directed at businesses and organizations, and is typically accomplished through spear-phishing or impersonation.

Email Header Analysis

An email header is a collection of fields that contain information about the origin, routing, and destination of an email message. The header is typically located at the top of the email message, before the body of the message. The following are some of the most common fields found in an email header:

- From: this field is displayed to the recipient when they receive an email. It typically contains the name and email address of the sender that the recipient sees. This field is often used by email clients to display the sender’s name in the inbox, and can be easily manipulated by attackers to make the email appear to be from a legitimate source.

- Return Path: also known as Envelope From, is the field that contains the email address that the message was sent from and that the receiving mail server uses to identify the sender. This field is used to identify the source of the email and to determine where to send delivery notifications and bounce messages. The Envelope From header is not displayed to the recipient, but it is stored in the email header as a technical information and can be visible to anyone who has access to the email header.

- Received: also known as the “Received From” or “Received By” header, is a field that is added to an email message as it is passed from one mail server to another. This field contains information about the routing of the message, including the IP address of the server that received the message, the date and time that the message was received, and the IP address of the server that the message was sent from.

- Return-Path: This field contains the email address that the message should be returned to if it cannot be delivered.

- Received-SPF: This field contains information about the SPF (Sender Policy Framework) check that was performed on the message.

- Authentication-Results: This field contains information about the authentication of the message, including the results of any DKIM (DomainKeys Identified Mail) or DMARC (Domain-based Message Authentication, Reporting & Conformance) checks that were performed.

“X headers” refer to any header field that begins with the letter “X”, followed by a hyphen. These headers are also known as “extended headers” or “non-standard headers”. They are not a part of the standard email protocol (such as SMTP) but they can be added by email clients, servers or other intermediaries to provide additional information or functionality.

- X-Originating-IP: this field contains the IP address of the computer that sent the message.

- X-Mailer: this field contains the software that was used to compose the email message.

- User-Agent: this field contains information about the email client that was used to send the message.

- MIME-Version: this field contains the version of Multipurpose Internet Mail Extensions (MIME) that was used to format the email message.

MIME is an extension protocol that allows emails to carry multimedia content such as images, audio, and video, as well as text in character sets other than ASCII. MIME defines a set of headers that can be used to specify the type of content in an email message, as well as how it should be displayed or handled by the recipient’s email client.

- Content-Type: this field contains information about the format of the message, such as whether it is plain text or HTML.

Email Content Analysis

An attacker could craft a malicious payload in the email to exploit the victim when opens the message. It could be a exploit inside the email body that triggers a vulnerability in the email client, or a malicious Attachment that contains malicious code when it is executed/opened. Moreover, it could contain embeded links that could redirect to a malicious webpage and exploit web vulnerabilities.

Email Server Security

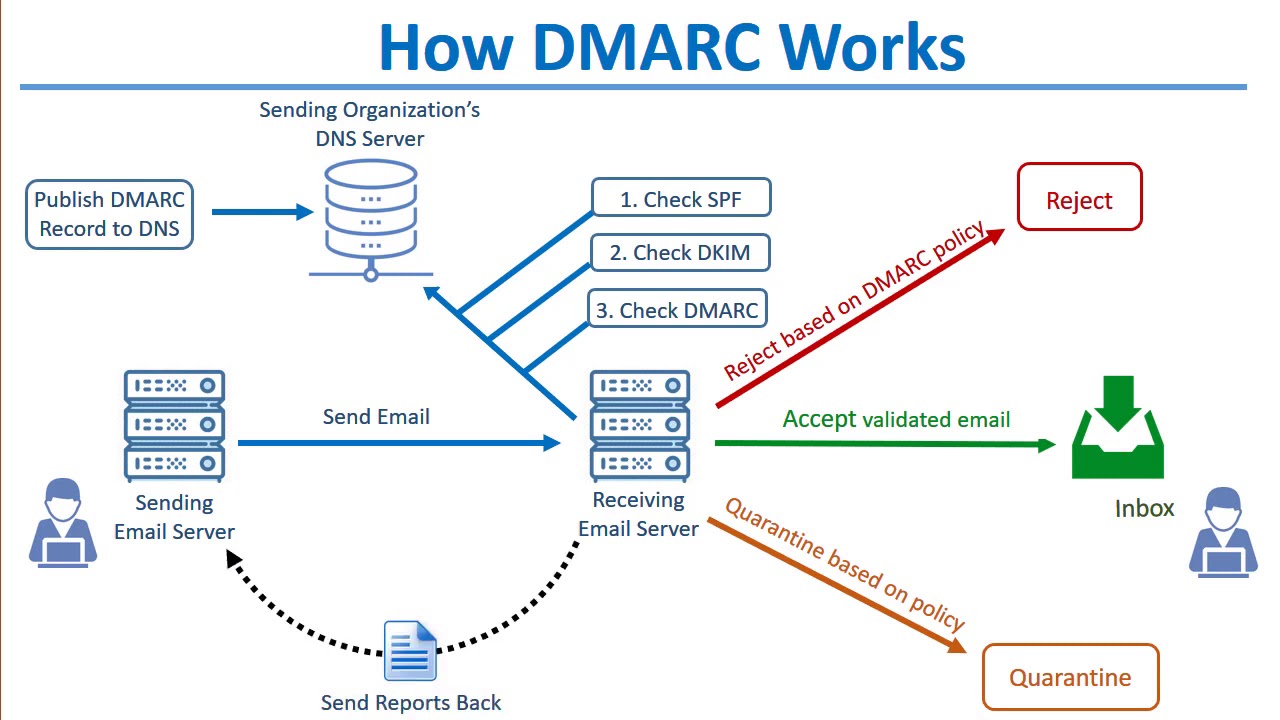

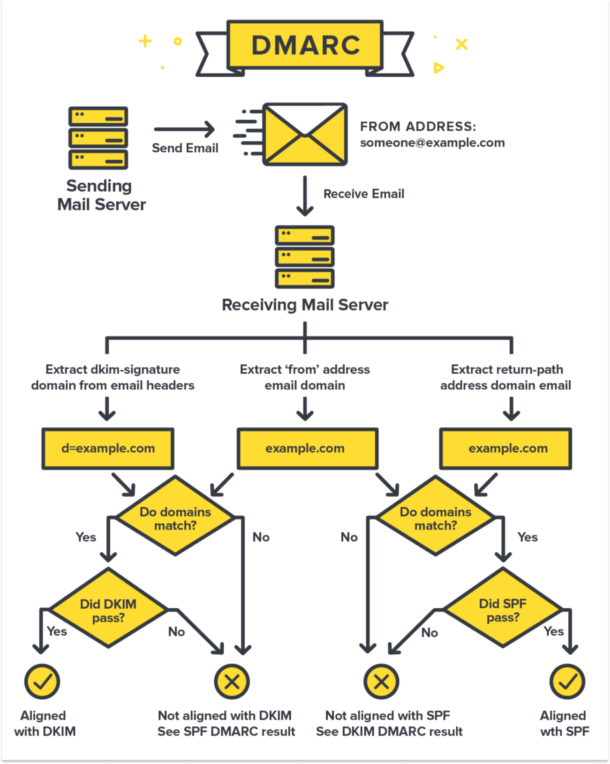

The best way to mitigate spoofing email attacks is to configure authentication for eamil server systems. This implies using SPF,DKIM and DMARC.

- Sender Policy Framework (SPF): An SPF record is a type of DNS (Domain Name System) record that is published in the domain’s DNS zone and it specifies which mail servers are authorized to send email on behalf of that domain. When an email message is received, the receiving mail server can check the SPF record for the domain in the message’s “From” address and compare it to the IP address that the message was received from. If the IP address does not match any of the authorized mail servers listed in the SPF record, the message can be rejected or flagged as potentially fraudulent.

This record states that email sent from IP addresses in the range of 192.0.2.0 to 192.0.2.255 and 198.51.100.123 are authorized to send email on behalf of example.com. The “~all” at the end of the record means that any email that fails the check should be marked as soft fail, which means that the email will be accepted but it could be flagged as potentially suspicious.

1

example.com. IN TXT "v=spf1 ip4:192.0.2.0/24 ip4:198.51.100.123 ~all"

This other example states that any server that has an IP address that matches the A or MX records for the domain example.com is authorized to send email from that domain, as well as any server that is included in the _spf.google.com domain’s SPF record (In other words, this spf record will trust the servers that the google spf record trust).

1

example.com. IN TXT "v=spf1 a mx include:_spf.google.com ~all"

- Domain Keys Identified Mail (DKIM): It allows the person receiving the email to check that it was actually sent by the domain it claims to be sent from and that it hasn’t been modified during transit. In DKIM, a domain owner creates a public/private key pair and publishes the public key in the domain’s DNS zone. When an email is sent from that domain, the sending server signs certain headers and the body of the email using the private key. The recipient’s mail server can then retrieve the public key from the DNS zone and use it to verify the digital signature on the email (the signature contains information such as signature’s algorithm, domain being claimed and the selector which is used to find the public key. If the signature is valid, it indicates that the email was sent by an authorized server for the domain and that the email has not been modified in transit.

1 2 3 4

DKIM-Signature: v=1; a=rsa-sha256; c=relaxed/relaxed; d=example.com; s=dkim; h=mime-version:from:date:message-id:subject:to; bh=1234567890abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ; b=1234567890abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQ

The fields of the signature example imply:

- “v=1” is the version of the DKIM protocol being used.

- “a=rsa-sha256” is the algorithm used to generate the digital signature. In this case, it is RSA with a SHA-256 hash.

- “c=relaxed/relaxed” specifies the canonicalization algorithm used for the headers and the body of the email. “relaxed” means that the email headers and body can be modified slightly without invalidating the signature.

- “d=example.com” is the domain being claimed by the email.

- ”s=dkim” is the selector. A selector is used to indicate which specific public key should be used to verify the signature. This allows an organization to use multiple keys for different purposes.

- “h=mime-version:from:date:message-id:subject:to” specifies which headers were included in the signature.

- “bh=1234567890abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ” is the body hash, which is a hash of the body of the email.

- “b=1234567890abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ” is the digital signature of the email, which is generated using the private key of the domain.

- Domain-Based Message Authentication, Reporting and Conformance (DMARC): This protocol works on top of DKIM or SPF and provides the domain owners a way to publish a policy in their DNS that specifies which mechanism(s) (SPF and/or DKIM) are used to authenticate email messages sent from their domain, and what the receiving mail servers should do if none of these mechanisms pass the check. DMARC also enables a reporting mechanism that allows the domain owner to receive feedback about the messages sent from their domain, including the number of messages that passed or failed DMARC evaluation, and the actions that receiving servers took on the messages. This allows domain owners to monitor the use of their domain and to detect any unauthorized use.

In DMARC, the alignment policy refers to the mechanism by which the domain used in the “From” address of an email message is compared to the domain used in the email’s SPF (Sender Policy Framework) or DKIM (DomainKeys Identified Mail) authentication mechanisms. The goal of the alignment policy is to ensure that the domain in the “From” address is the same or a subdomain of the domain used in the authentication mechanisms.

There are two types of alignment in DMARC: relaxed and strict. - Relaxed alignment: In relaxed alignment, the domain used in the “From” address of the email message can be a subdomain of the domain used in the authentication mechanisms. For example, if an email is sent from “newsletter.example.com” and its SPF or DKIM authentication mechanisms are aligned with “example.com”, the DMARC result is considered “aligned” (relaxed) - Strict alignment: In strict alignment, the domain used in the “From” address of the email message must be exactly the same as the domain used in the authentication mechanisms. For example, if an email is sent from “newsletter.example.com” and its SPF or DKIM authentication mechanisms are aligned with “newsletter.example.com”, the DMARC result is considered “aligned” (strict)

1

_dmarc.example.com. IN TXT "v=DMARC1; p=quarantine; pct=25; adkim=r; aspf=s; rua=mailto:dmarc-reports@example.com; ruf=mailto:forensic-reports@example.com; sp=reject; fo=1; rf=afrf"

This policy specifies the following: - “v=DMARC1” is the version of the DMARC protocol being used. - “p=quarantine” specifies that email messages that fail DMARC evaluation should be quarantined (delivered to the spam or junk folder) - “pct=25” specifies that 25% of email messages that fail DMARC evaluation should be subject to the “p” policy. The rest will be evaluated according to the receiving server’s local policy. - “adkim=r” is using relaxed alignment mode for DKIM, meaning that the domain used in the DKIM signature can be a subdomain of the domain in the “From” address - “aspf=s” is using strict alignment mode for SPF, meaning that the domain used in the SPF check must be exactly the same as the domain in the “From” address - “rua=mailto:dmarc-reports@example.com” specifies the email address to which aggregate reports (daily or weekly) should be sent. - “ruf=mailto:forensic-reports@example.com” specifies the email address to which forensic reports should be sent. - “sp=reject” specifies that email messages that fail SPF evaluation should be rejected. - “fo=1” specifies that the receiving server should generate a forensic report for any message that fails DMARC evaluation. - “rf=afrf” specifies the format of the forensic reports (Authentication-Results Feedback Format)

SMTP Log Analysis

SMTP Logs are formatted in the reques/response way:

- Time of request/response

- Address of the recipient

- Size of message

- Status code

Some important Satus code in SMTP are:

| Status Code | Explanation |

|---|---|

| 220 | Service ready - The server is ready to accept a new message |

| 250 | Requested action completed successfully - The server has successfully completed the requested action |

| 421 | Service not available, closing transmission channel - The server is not available and is closing the connection |

| 451 | Requested action aborted: local error in processing - The server was unable to process the request due to a local error |

| 452 | Requested action not taken: insufficient system storage - The server was unable to complete the requested action because there is not enough storage space available |

S/MIME